Detecting and Understanding Social Interactions

We are using machine learning methods to detect and model egocentric social interactions, with a focus on giving blind and visually impaired users better awareness of their social context, including arrivals/departures, eye/head gaze, and salient visual features such as stature, hairstyle, and clothing.

Our work on detecting egocentric social interactions from wearable data shows that the problem is not as simple as detecting faces or detecting voice activity. Combining features derived from detected faces and voices with accelerometer data significantly outperforms any single signal: for example, SVM-based social interaction detection yields an ROC AUC of 0.58 on faces alone, whereas using all three signals yields an AUC of 0.86. This can be partly explained by the intuition that socializing does not always involve face-to-face interaction (as when walking together), nor does it always involve voice activity (as when watching some other phenomenon).
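For readers unfamiliar with the metric, the AUC values quoted above can be read as the probability that a detector scores a randomly chosen interaction window higher than a randomly chosen non-interaction window. The following is a minimal sketch of that computation only; the labels and scores are made-up illustrative values, not data or results from this project, and the names `roc_auc`, `face_only_scores`, and `fused_scores` are hypothetical.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical ground truth (1 = social interaction) and detector scores.
labels = [1, 1, 1, 0, 0, 0]
face_only_scores = [0.9, 0.4, 0.3, 0.8, 0.5, 0.2]  # single-signal detector
fused_scores = [0.9, 0.7, 0.6, 0.8, 0.3, 0.2]      # multi-signal detector

print("face only:", roc_auc(labels, face_only_scores))
print("fused:    ", roc_auc(labels, fused_scores))
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which is why the gap between 0.58 and 0.86 is substantial.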

To improve detection and modeling of egocentric social interactions, and to further promote this area as a problem of academic interest and immediate application, we seek to expand on our wearable datasets by constructing deidentified, multi-day, egocentric datasets of social interactions containing synchronized audio, video, and motion data, along with social interaction annotations and baseline results for detection and modeling. Our approach differs from existing work in that it moves beyond visual features alone to include motion and audio, and it does not require specialized eye-tracking hardware. Furthermore, we focus on real-time methods with immediate applicability to individuals who are blind or visually impaired.

Discreet Wearable Assistant

Our wearable assistant is built to help its wearer be more social by providing timely, discreet identification of nearby acquaintances. Specific use cases include 1) facilitating serendipitous interactions, as when the wearer is walking in a crowded hallway or sidewalk and isn't aware of a nearby acquaintance, and 2) privately identifying acquaintances who initiate an interaction, in case the wearer does not remember their name. These functions are especially helpful for individuals who are blind or low-vision, or who otherwise functionally suffer from prosopagnosia, but we envision more general interest provided the system is not burdensome to use, that is, provided it doesn't encumber the wearer physically or socially.

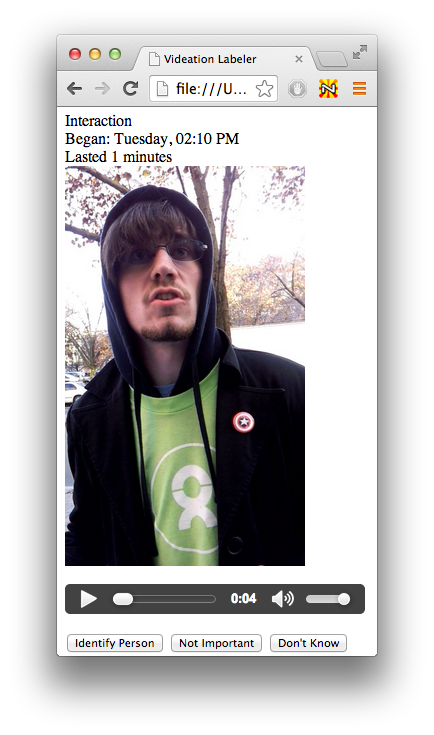

The system is designed foremost so that the wearer is not physically or socially encumbered; ideally, others aren't even aware that a wearable system is being used. Although its primary purpose is to detect and recognize nearby acquaintances (people that the wearable has been previously trained on), it is also intended to more broadly detect, model, and summarize the social interaction activities of its wearer. Chiefly, this means identifying the number of people the wearer interacted with and for how long. This allows it to query the user only about substantive interactions rather than, e.g., when the wearer simply pays a barista for coffee. It also provides the wearer (and its own models) with data about typical interaction patterns.
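As a rough illustration of the summarization step described above, the sketch below totals interaction time per person and keeps only interactions long enough to be worth asking the wearer about. It is not the system's actual implementation: the record layout `(person_id, start_s, end_s)`, the function names, and the 60-second threshold are all assumptions chosen for the example.

```python
from collections import defaultdict


def summarize(interactions):
    """Return total interaction time in seconds for each person."""
    totals = defaultdict(float)
    for person_id, start_s, end_s in interactions:
        totals[person_id] += end_s - start_s
    return dict(totals)


def substantive(interactions, min_duration_s=60.0):
    """Keep only interactions lasting at least min_duration_s seconds.

    The 60-second default is an arbitrary illustrative threshold.
    """
    return [rec for rec in interactions if rec[2] - rec[1] >= min_duration_s]


# Hypothetical day of detected interactions: (person_id, start_s, end_s).
day = [
    ("barista", 0.0, 20.0),         # brief transaction, below the threshold
    ("person_a", 100.0, 400.0),     # five-minute conversation
    ("person_a", 1000.0, 1090.0),   # later follow-up
    ("person_b", 2000.0, 2030.0),   # short greeting
]

print(summarize(day))
print(substantive(day))
```

With these made-up records, only the two conversations with `person_a` pass the filter, so the coffee purchase would not trigger a query.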

Learning the Day’s Interactions

We are building a wearable assistant that can learn the social interactions you engage in throughout each day and, if needed, ask you for the names of people you frequently interact with so that it can subsequently identify them to you.

Currently, the assistant queries the user through an accessible, audio-driven interface that provides small, privacy-preserving snippets from each conversation: enough for the wearer to identify with whom they were speaking, but not so much as to be intrusive.