Some previous studies have shown that zooming and panning can improve the understanding of tactile graphics. Because our tactile device not only outputs raised dots but also provides a matrix of touch inputs, we can implement multi-touch gesture recognition similar to that of an Android phone and use these gestures to make our system more interactive. As a simple example, consider a student who is trying to learn about a polygon. If only a static polygon is displayed on the tactile device, it would be difficult for a blind student to interpret and learn about it. If we add multi-touch functions such as zooming and panning, it becomes much easier for the student to explore the polygon.
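The zoom and pan interaction can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the project's implementation: it assumes the touch matrix reports finger positions in pin coordinates, classifies a two-finger gesture as a pinch (zoom) when the spacing between the fingers changes by more than a threshold and as a drag (pan) otherwise, and then resamples a larger binary image onto the 24×15 pin matrix. The function names and the `zoom_threshold` value are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Grid = List[List[int]]  # 1 = raised pin, 0 = lowered pin

DEVICE_W, DEVICE_H = 24, 15  # pin columns x rows of the lab's tactile display


def _distance(p: Point, q: Point) -> float:
    return math.hypot(p[0] - q[0], p[1] - q[1])


def _centroid(p: Point, q: Point) -> Point:
    return ((p[0] + q[0]) / 2.0, (p[1] + q[1]) / 2.0)


def classify_two_finger_gesture(start: Tuple[Point, Point],
                                end: Tuple[Point, Point],
                                zoom_threshold: float = 0.15) -> Tuple[str, object]:
    """Classify a two-finger gesture as a pinch ("zoom", scale) or a drag ("pan", (dx, dy)).

    zoom_threshold is a hypothetical tuning value: the relative change in finger
    spacing above which the gesture is treated as a zoom.
    """
    d0, d1 = _distance(*start), _distance(*end)
    scale = d1 / d0 if d0 > 0 else 1.0
    if abs(scale - 1.0) > zoom_threshold:
        return ("zoom", scale)
    c0, c1 = _centroid(*start), _centroid(*end)
    return ("pan", (c1[0] - c0[0], c1[1] - c0[1]))


def render_viewport(image: Grid, center: Point, scale: float,
                    width: int = DEVICE_W, height: int = DEVICE_H) -> Grid:
    """Sample a window of a larger binary image onto the pin matrix.

    scale > 1 magnifies (each pin covers less of the image). Uses nearest-neighbour
    sampling; positions outside the image are rendered as lowered pins.
    """
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(height):
        row = []
        for c in range(width):
            x = center[0] + (c - width / 2) / scale
            y = center[1] + (r - height / 2) / scale
            xi, yi = math.floor(x), math.floor(y)
            row.append(image[yi][xi] if 0 <= yi < rows and 0 <= xi < cols else 0)
        out.append(row)
    return out
```

For example, two fingers moving from (10, 7) and (14, 7) to (8, 7) and (16, 7) double their spacing and are classified as `("zoom", 2.0)`; rendering with `scale=2.0` then turns a single raised pixel at the view center into a 2×2 block of pins. A pan result would simply be added to `center` before the next render.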

Synchronizing a synthetic voice with the individual elements of the polygon makes our platform even more interactive. For instance, if the blind student wants to go over specific information about the polygon, the student only needs to single-tap or double-tap the corresponding element (edge or angle) of the polygon on the tactile device, and the synthetic voice describes what was touched.
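Linking a tap to a polygon element amounts to hit-testing the touch position against the polygon's vertices and edges. The sketch below assumes touches arrive in pin coordinates and that the returned description would be passed to some text-to-speech engine, which is not shown. `describe_touch` and its `tolerance` parameter are hypothetical names; vertices are checked before edges so that a tap on a corner reports the angle, and angles are computed as the smaller angle between the two neighboring edges, which matches the interior angle only for convex polygons. Distinguishing single from double taps by timing is left out.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def _point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    # Project p onto the segment and clamp the projection to its end points.
    t = max(0.0, min(1.0, ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / seg_len_sq))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def _angle_at(polygon: List[Point], i: int) -> float:
    """Angle in degrees at vertex i (the smaller angle; correct for convex polygons)."""
    v, prev, nxt = polygon[i], polygon[i - 1], polygon[(i + 1) % len(polygon)]
    a1 = math.atan2(prev[1] - v[1], prev[0] - v[0])
    a2 = math.atan2(nxt[1] - v[1], nxt[0] - v[0])
    deg = abs(math.degrees(a1 - a2)) % 360
    return min(deg, 360 - deg)


def describe_touch(polygon: List[Point], touch: Point,
                   tolerance: float = 1.0) -> Optional[str]:
    """Return a spoken-style description of the touched element, or None."""
    for i, v in enumerate(polygon):  # vertices take priority over edges
        if math.hypot(touch[0] - v[0], touch[1] - v[1]) <= tolerance:
            return f"Angle at vertex {i + 1}: {_angle_at(polygon, i):.0f} degrees"
    for i in range(len(polygon)):
        a, b = polygon[i], polygon[(i + 1) % len(polygon)]
        if _point_segment_distance(touch, a, b) <= tolerance:
            length = math.hypot(b[0] - a[0], b[1] - a[1])
            return f"Edge {i + 1}: length {length:.1f} units"
    return None
```

For the right triangle `[(0, 0), (8, 0), (0, 6)]`, a tap at `(0.2, 0.3)` returns `"Angle at vertex 1: 90 degrees"` and a tap at `(4, 3)` returns `"Edge 2: length 10.0 units"`.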

Similar to the polygon example above, we can use special dynamic renderings to display and teach more difficult concepts. For example, circular waves can be displayed as vibrating concentric circles emanating outward from the center, and projectile motion can be displayed as an animated pattern that traces the trajectory of the projectile.
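Both renderings can be produced frame by frame on a binary pin grid. The sketch below is one possible approach, not the project's renderer: `ripple_frame` raises every pin whose distance from the grid center lies on a ring that moves outward over time, and `projectile_path` with `projectile_frames` samples ideal, drag-free projectile motion and reveals the trajectory one sample per frame. All names and the default wavelength, speed, and time step are hypothetical choices.

```python
import math
from typing import List, Tuple

Grid = List[List[int]]  # 1 = raised pin, 0 = lowered pin


def ripple_frame(width: int, height: int, t: float, wavelength: float = 4.0,
                 speed: float = 1.0, ring_width: float = 1.0) -> Grid:
    """One frame of concentric rings expanding from the grid center.

    A pin is raised when its distance from the center, shifted by speed * t,
    falls within ring_width of a crest; crests repeat every `wavelength` pins.
    """
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    frame = []
    for r in range(height):
        row = []
        for c in range(width):
            d = math.hypot(c - cx, r - cy)
            phase = (d - speed * t) % wavelength  # non-negative for wavelength > 0
            row.append(1 if phase < ring_width else 0)
        frame.append(row)
    return frame


def projectile_path(v0: float, angle_deg: float, g: float = 9.81,
                    dt: float = 0.05) -> List[Tuple[float, float]]:
    """Sample drag-free projectile motion until it falls below y = 0.

    Assumes 0 < angle_deg <= 90 and g > 0.
    """
    vx = v0 * math.cos(math.radians(angle_deg))
    vy = v0 * math.sin(math.radians(angle_deg))
    points, t = [], 0.0
    while True:
        y = vy * t - 0.5 * g * t * t
        if y < 0:
            break
        points.append((vx * t, y))
        t += dt
    return points


def projectile_frames(points: List[Tuple[float, float]],
                      width: int, height: int) -> List[Grid]:
    """Reveal the trajectory one sample per frame, scaled so the whole path fits."""
    max_x = max(p[0] for p in points) or 1.0
    max_y = max(p[1] for p in points) or 1.0
    grid = [[0] * width for _ in range(height)]
    frames = []
    for x, y in points:
        col = round(x / max_x * (width - 1))
        row = (height - 1) - round(y / max_y * (height - 1))  # row 0 is the top edge
        grid[row][col] = 1
        frames.append([r[:] for r in grid])  # copy so earlier frames stay unchanged
    return frames
```

Calling `ripple_frame(24, 15, t)` for increasing `t` and sending each grid to the device produces rings that travel outward; `projectile_frames(projectile_path(10.0, 45.0), 24, 15)` yields a growing arc from the bottom-left corner through the apex on the top row.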

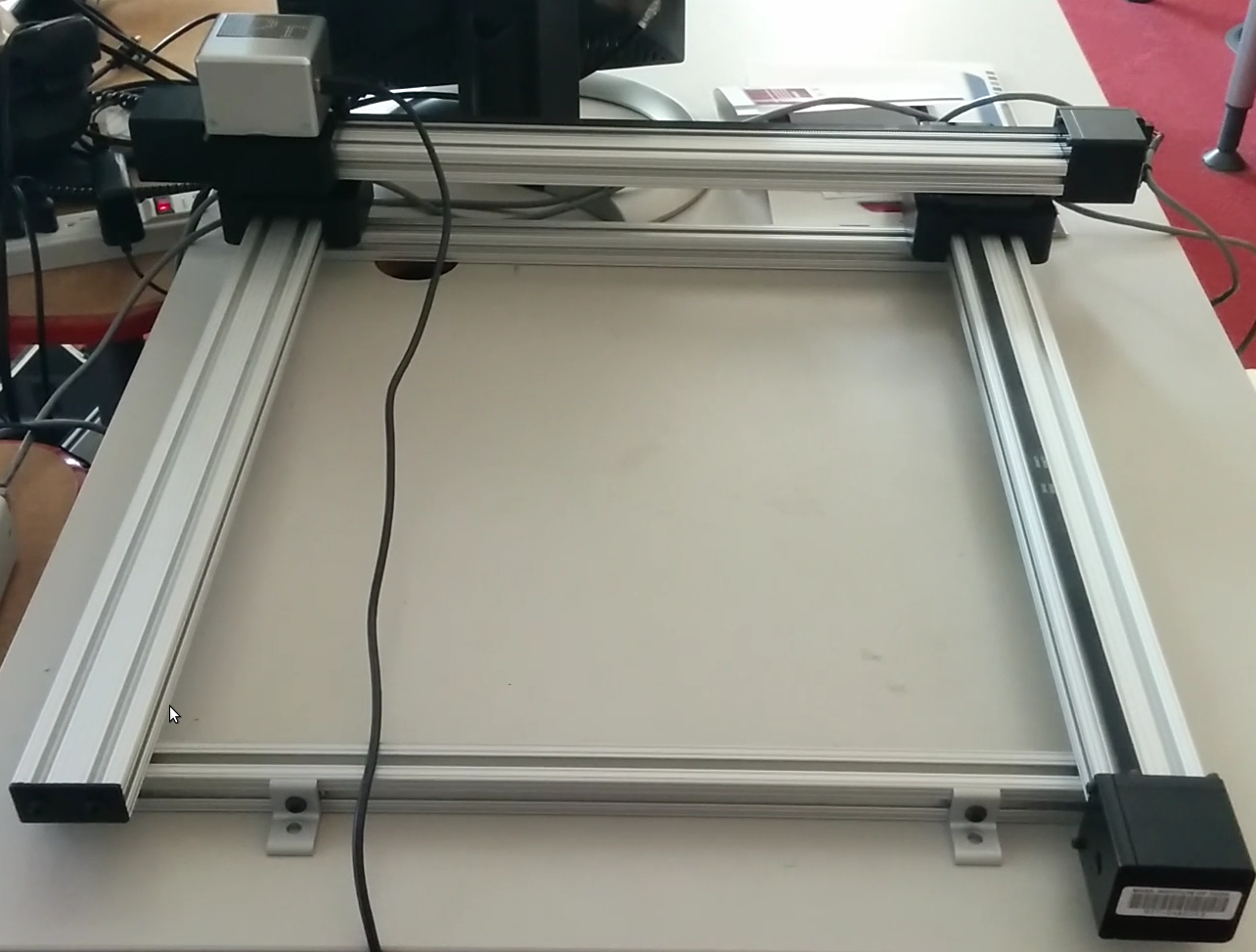

Braille tactile devices available on the market today are very expensive. For example, the tactile device in our lab costs around $5,000 USD and has a very limited resolution of 24×15 pins. To overcome both the resolution and the cost problem, we have integrated it with an encoder-aided mechanical XY-slider whose central stage can move over an area of 50 cm × 50 cm. This raises the effective resolution to 200×200 dots. A braille display with 200×200 dots would cost an estimated $450,000, whereas the overall system (XY-slider plus tactile device) costs only around $8,000.
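The text does not describe how the encoder readings select what the pins show. One plausible scheme, sketched here under explicit assumptions, treats the content as a 200×200 virtual canvas and displays the 24×15 window that lies under the device's current stage position. The 2.5 mm dot pitch is an assumption (close to standard braille dot spacing), chosen because 500 mm / 2.5 mm = 200 matches the stated effective resolution; the encoder interface is hypothetical and reduced to stage positions in millimetres.

```python
from typing import List

Grid = List[List[int]]  # 1 = raised pin, 0 = lowered pin

PIN_PITCH_MM = 2.5                  # ASSUMED dot spacing; not stated in the text
DEVICE_COLS, DEVICE_ROWS = 24, 15   # physical pins on the tactile device
CANVAS_COLS, CANVAS_ROWS = 200, 200 # effective resolution with the XY-slider


def window_offset(stage_mm: float, pitch_mm: float,
                  device_pins: int, canvas_pins: int) -> int:
    """Convert a stage position (from the hypothetical encoder) into a pin offset.

    The offset is clamped so the device window never leaves the canvas.
    """
    offset = int(round(stage_mm / pitch_mm))
    return max(0, min(offset, canvas_pins - device_pins))


def visible_window(canvas: Grid, stage_x_mm: float, stage_y_mm: float) -> Grid:
    """Return the 24x15 slice of the virtual canvas under the device."""
    col0 = window_offset(stage_x_mm, PIN_PITCH_MM, DEVICE_COLS, CANVAS_COLS)
    row0 = window_offset(stage_y_mm, PIN_PITCH_MM, DEVICE_ROWS, CANVAS_ROWS)
    return [row[col0:col0 + DEVICE_COLS] for row in canvas[row0:row0 + DEVICE_ROWS]]
```

With the stage at 125 mm × 250 mm, the device would show canvas columns 50 to 73 and rows 100 to 114; every time the encoders report a new position, the pins are refreshed with the corresponding slice.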

An important aspect of this project is to create an open-source, high-quality typesetting system for the automatic transcription of STEM books into braille. Learning resources for blind people are quite sparse, and transcription of STEM material is a very expensive and time-consuming process. For example, transcribing a math textbook takes several months and costs more than $100,000. With our automated, open-source platform, it would be easy, fast, and inexpensive for publishers to transcribe STEM books. The scope of this automated transcription platform is not limited to STEM books: it can be used to transcribe any content, book, or webpage available online. A snapshot of the current version of this typesetting system is depicted below.
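At its core, braille transcription maps characters to six-dot cells. The toy example below covers only uncontracted letters, digits (preceded by the number sign), and spaces, encoded as Unicode braille patterns (U+2800 plus one bit per raised dot). It is not the project's typesetting system: a real transcriber must also handle capitals, contractions, punctuation, letter indicators after numbers, and mathematical notation such as Nemeth code, none of which this sketch attempts. The function names are hypothetical.

```python
# Dots for letters a-j; k-t add dot 3, and u, v, x, y, z add dots 3 and 6.
_A_TO_J = ["1", "12", "14", "145", "15", "124", "1245", "125", "24", "245"]


def _cell(dots: str) -> str:
    """Unicode braille pattern: U+2800 plus bit (n - 1) for each raised dot n."""
    return chr(0x2800 + sum(1 << (int(d) - 1) for d in dots))


def _build_letters() -> dict:
    dots = {}
    for i, pattern in enumerate(_A_TO_J):
        dots["abcdefghij"[i]] = pattern
        dots["klmnopqrst"[i]] = pattern + "3"
    for letter, base in zip("uvxyz", "abcde"):
        dots[letter] = dots[base] + "36"
    dots["w"] = "2456"  # w is the exception to the pattern
    return {letter: _cell(pattern) for letter, pattern in dots.items()}


LETTERS = _build_letters()
NUMBER_SIGN = _cell("3456")
# Digits 1-9 and 0 reuse the cells for a-j after a number sign.
DIGITS = {d: LETTERS[letter] for d, letter in zip("1234567890", "abcdefghij")}
BLANK = _cell("")  # U+2800, the empty cell used for spaces


def transcribe_grade1(text: str) -> str:
    """Uncontracted transcription of letters, digits, and spaces only."""
    out = []
    in_number = False
    for ch in text.lower():  # capital indicators are not handled
        if ch in DIGITS:
            if not in_number:
                out.append(NUMBER_SIGN)
                in_number = True
            out.append(DIGITS[ch])
            continue
        in_number = False
        if ch in LETTERS:
            out.append(LETTERS[ch])
        elif ch == " ":
            out.append(BLANK)
        else:
            raise ValueError(f"no mapping for {ch!r} in this sketch")
    return "".join(out)
```

For example, `transcribe_grade1("abc 12")` returns `"⠁⠃⠉⠀⠼⠁⠃"`: three letter cells, a blank cell, the number sign, and the cells for 1 and 2.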

The ultimate goal of this project is to create a highly intuitive, iPad-like, touch-capable display for blind and visually impaired people. Although we have only just started developing this platform, we strongly believe that its outcome could be a fundamental advance in STEM accessibility for blind and visually impaired people.

This video sequence can be downloaded here.